Learning and Exploring Motor Skills with Spacetime Bounds

Eurographics 2021

Abstract

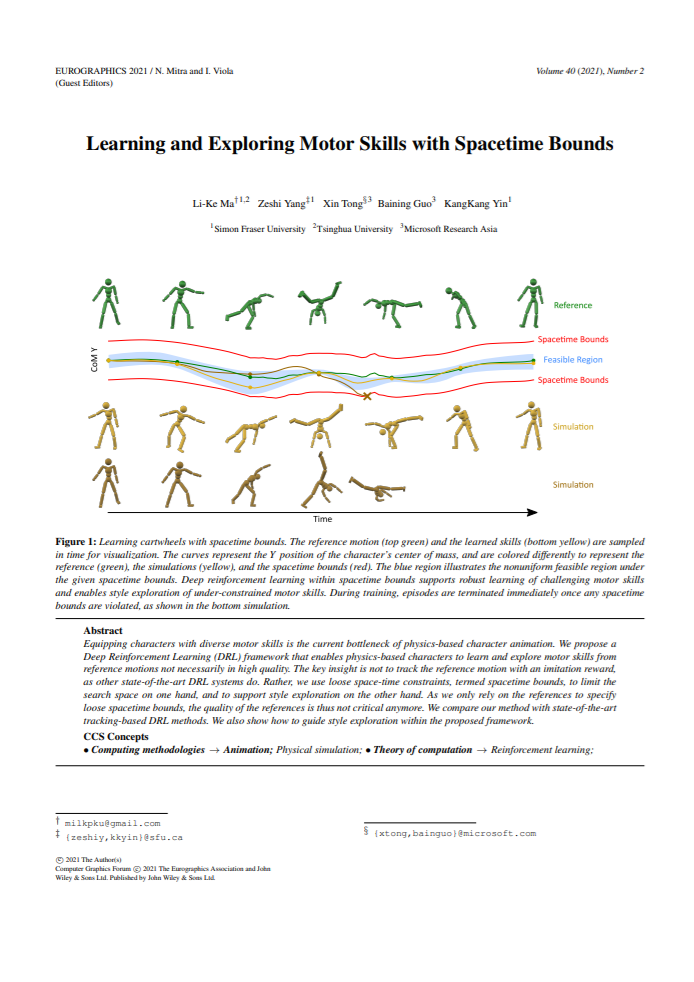

Equipping characters with diverse motor skills is the current bottleneck of physics-based character animation. We propose a Deep Reinforcement Learning (DRL) framework that enables physics-based characters to learn and explore motor skills from reference motions that are not necessarily of high quality. The key insight is not to track the reference motion with an imitation reward, as other state-of-the-art DRL systems do. Instead, we use loose spacetime constraints, termed spacetime bounds, to limit the search space on the one hand and to support style exploration on the other. Because we rely on the references only to specify loose spacetime bounds, their quality is no longer critical. We compare our method with state-of-the-art tracking-based DRL methods. We also show how to guide style exploration within the proposed framework.
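The abstract does not spell out how the bounds limit the search space. A minimal sketch, assuming the bounds act as an early-termination test rather than as a reward term: at each frame, the simulated state is compared with the reference state at the same time, and the episode ends at the first frame where any feature leaves its allowed band. The names `within_bounds` and `episode_length`, the per-feature absolute-difference test, and the representation of states as NumPy arrays are illustrative assumptions, not the paper's implementation.

```python
import numpy as np


def within_bounds(sim_state, ref_state, bound):
    """True if every feature satisfies |sim - ref| <= bound.

    `bound` may be a scalar or a per-feature array (NumPy broadcasting).
    Hypothetical helper for illustration only.
    """
    diff = np.abs(np.asarray(sim_state, dtype=float) - np.asarray(ref_state, dtype=float))
    return bool(np.all(diff <= bound))


def episode_length(sim_traj, ref_traj, bound):
    """Number of frames the simulated trajectory stays inside the bounds.

    The episode is assumed to terminate at the first violating frame;
    no imitation reward is computed, only the pass/fail bound check.
    """
    for t, (sim_state, ref_state) in enumerate(zip(sim_traj, ref_traj)):
        if not within_bounds(sim_state, ref_state, bound):
            return t  # terminate here: frame t is outside the bounds
    return min(len(sim_traj), len(ref_traj))


# Usage: a 2-feature trajectory that drifts 0.6 away from the reference at frame 3.
ref = np.zeros((5, 2))
sim = ref + np.array([[0.0, 0.0], [0.1, 0.0], [0.2, 0.0], [0.6, 0.0], [0.0, 0.0]])
print(episode_length(sim, ref, bound=0.5))  # tighter bound: terminates at frame 3
print(episode_length(sim, ref, bound=1.0))  # looser bound: survives all 5 frames
```

Under this assumption, widening `bound` loosens the constraint: the reference only has to be roughly right, and the policy gains room to deviate from it stylistically, which is the trade-off the abstract describes.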